Logics

Logics are a system for defining behaviors and attaching them to objects, similar to the Logics used in DarkXL. Rather than having fixed behavior, objects are basically containers to which components and behaviors can be attached. Logics are self-contained behavior modules that can be implemented either in code (in the game DLL) or in script. They are referenced by name and can be added to or removed from objects at runtime. To affect objects, Logics provide various callbacks, such as Update (called while the object is active), and they can also receive messages, though during setup each Logic must opt in to the messages it wishes to receive.
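To make the object/Logic split concrete, here is a minimal C++ sketch of Logics as named behavior modules with an Update callback and opt-in message handling. Every name in it (`Logic`, `LogicRegistry`, `MSG_ACTIVATE` and so on) is hypothetical, and script-implemented Logics are left out; it only illustrates the idea that objects are containers and that behavior lives in the attached Logics.

```cpp
#include <algorithm>
#include <cstdint>
#include <functional>
#include <map>
#include <string>
#include <vector>

// Hypothetical message IDs; each is one bit so a Logic can opt in with a mask.
enum MessageId : uint32_t {
    MSG_ACTIVATE = 1u << 0,
    MSG_DAMAGE   = 1u << 1,
};

struct Object;

// A Logic: a named behavior module with optional callbacks.
struct Logic {
    std::string name;
    uint32_t messageMask = 0;                           // messages opted in to during setup
    std::function<void(Object&, float)> update;         // called while the object is active
    std::function<void(Object&, MessageId)> onMessage;  // called only for opted-in messages
};

// Objects are containers; their behavior comes from the attached Logics.
struct Object {
    bool active = true;
    int state = 0;                     // stand-in for real per-object data
    std::vector<const Logic*> logics;  // processed in the order they were added
};

class LogicRegistry {
public:
    void registerLogic(const Logic& logic) { logics_[logic.name] = logic; }

    // Logics are referenced by name and attached at runtime.
    bool attach(Object& obj, const std::string& name) const {
        auto it = logics_.find(name);
        if (it == logics_.end()) return false;
        obj.logics.push_back(&it->second);
        return true;
    }

    // Remove every attached Logic with the given name.
    void detach(Object& obj, const std::string& name) const {
        auto& v = obj.logics;
        v.erase(std::remove_if(v.begin(), v.end(),
                               [&](const Logic* l) { return l->name == name; }),
                v.end());
    }

private:
    std::map<std::string, Logic> logics_;  // map nodes keep Logic pointers stable
};

void updateObject(Object& obj, float dt) {
    if (!obj.active) return;  // Update only runs for active objects
    for (const Logic* l : obj.logics)
        if (l->update) l->update(obj, dt);
}

void sendMessage(Object& obj, MessageId msg) {
    // Only Logics that opted in to this message see it.
    for (const Logic* l : obj.logics)
        if ((l->messageMask & msg) && l->onMessage) l->onMessage(obj, msg);
}
```

Using a bit mask for the opt-in keeps message dispatch cheap: a Logic that never asked for a message is skipped with a single AND.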

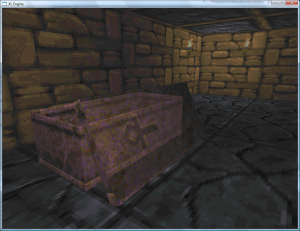

The most basic Logic a game needs is the player itself, which is currently implemented in the game DLL. As far as the engine is concerned, the player Logic is no different from any other Logic, but it is where player behavior is controlled, including any input that affects the player. Other input that does not affect the player is handled by the UI script that runs the “GameView.” For DaggerXL I’ve also implemented the Logics that control doors, switches and other interactive objects inside the dungeons. This means that dungeon interaction is nearly finished, and I’ll probably move on to exteriors in the coming week and finally get everything back to where it should be.

Another area I’ve been thinking about is the editor, which will come after this set of builds is complete, as you’ll see below.

Logics define the per-object data they need in order to operate. This data can simply be space to store state, such as the current animation time. Often, however, it is derived from the objects themselves or from loaded data. Semantics are used to link the per-object data a Logic requests to the engine’s data: as data is loaded or defined, a particular variable may register a semantic with the Logic/script system, and when a Logic is assigned to an object the semantics are matched up and the data is set up accordingly.

But how do objects receive Logics? For existing data, the data format loader assigns Logics manually based on the object type; for example, “Logic_Door” is used for door objects in Daggerfall. Once the editor comes online, users will be able to assign whatever Logic they want, since it is simply stored as a name string. In fact an object can have any number of Logics, which are processed in the order they were added. There will also be editor semantics for the Logic data declaration, and these semantics, along with UI meta data defined inside the scripts or code, will be used to generate the editor UI needed to assign data to an object.
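The semantic matching step might look something like the following sketch, assuming (purely for illustration) that every per-object value is a `float` and that semantics are plain strings such as the hypothetical `DOOR_OPEN_ANGLE`. Fields with no semantic are treated as private Logic state, and fields whose semantic the object does not provide fall back to a declared default.

```cpp
#include <map>
#include <string>
#include <vector>

// One per-object variable a Logic asks for. An empty semantic means plain
// state storage (e.g. current animation time) that the Logic owns itself.
struct FieldDecl {
    std::string name;
    std::string semantic;  // hypothetical semantic names, e.g. "DOOR_OPEN_ANGLE"
    float defaultValue = 0.0f;
};

struct LogicDecl {
    std::string name;
    std::vector<FieldDecl> fields;
};

// Values that loaded data registered for one object, keyed by semantic.
using SemanticTable = std::map<std::string, float>;

// Per-object instance data created when a Logic is assigned to an object.
struct LogicInstance {
    const LogicDecl* decl;
    std::vector<float> values;  // parallel to decl->fields
};

// Match the Logic's declared semantics against what the object provides.
LogicInstance bindLogic(const LogicDecl& decl, const SemanticTable& objectData) {
    LogicInstance inst{&decl, {}};
    inst.values.reserve(decl.fields.size());
    for (const FieldDecl& f : decl.fields) {
        auto it = f.semantic.empty() ? objectData.end() : objectData.find(f.semantic);
        inst.values.push_back(it != objectData.end() ? it->second : f.defaultValue);
    }
    return inst;
}
```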

As an example, say you want to add a new door type to DaggerXL. You would create a new Logic script file and define the Logic in it. In the data declaration you define the per-object data the Logic needs and assign the appropriate semantics and UI meta data. The UI can use edit boxes, sliders, check boxes or other appropriate controls, including sensible limits. Then simply include this Logic file in the Core Logics UI file for your mod, and the engine and editor will pick it up automatically. In this way behaviors can be added or edited in seconds, and the editor immediately lets you start using the new Logics with an appropriate UI.
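Generating editor UI from meta data could work roughly like this. The sketch assumes a hypothetical `UiMeta` record per declared field; the editor builds one row per field and clamps edits to the declared limits. Real control creation is out of scope here, so widgets are represented as descriptive strings.

```cpp
#include <algorithm>
#include <string>
#include <vector>

enum class Control { EditBox, Slider, CheckBox };

// Editor-facing meta data attached to a field in a Logic's data declaration.
struct UiMeta {
    std::string label;
    Control control = Control::EditBox;
    float minValue = 0.0f;
    float maxValue = 1.0f;
};

struct EditorField {
    std::string semantic;  // hypothetical semantic name
    UiMeta ui;
};

const char* controlName(Control c) {
    switch (c) {
    case Control::EditBox:  return "EditBox";
    case Control::Slider:   return "Slider";
    case Control::CheckBox: return "CheckBox";
    }
    return "?";
}

// One row per declared field: the editor needs no hand-written panel.
std::vector<std::string> buildPanel(const std::vector<EditorField>& fields) {
    std::vector<std::string> rows;
    for (const auto& f : fields)
        rows.push_back(std::string(controlName(f.ui.control)) + ": " + f.ui.label);
    return rows;
}

// Apply a value typed or dragged in the editor, honoring the declared limits.
float applyEdit(const UiMeta& ui, float requested) {
    if (ui.control == Control::CheckBox)
        return requested != 0.0f ? 1.0f : 0.0f;  // check boxes store 0 or 1
    return std::clamp(requested, ui.minValue, ui.maxValue);
}
```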

Rendering

As I’m sure you’re all aware, I’ve been adding software rendering support for a variety of reasons, including increased compatibility, closer emulation of the original look, and having a reference for figuring out and matching up the rendering of all modes as closely as possible. However, I ran into a problem: there are many feature combinations I want to support, and I don’t want to add a lot of code or conditionals to the scanline rendering, since it is the most time-consuming part of the rasterizer. I had been hand coding a variety of scanline drawing functions, each using only the features it needed, but it became obvious that this wasn’t going to scale. For example, the 32 bit renderer had fallen out of date with the 8 bit renderer I had been focusing on.

The shader system (the OpenGL, shader-based renderer) uses #defines so that only a few shaders need to be written by hand; the shader code actually used is generated from this base code with the appropriate defines set. For every set of features, shader code is generated that fulfills the requirements without a large number of if statements in the shader or a lot of redundant code that may fall out of date.
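As a sketch of the define-based approach, assuming a hypothetical set of feature bits, one shared GLSL source can be turned into a specific permutation by prepending `#define` lines. GLSL requires `#version` to be the first directive, so the defines go after it; the resulting string would then be compiled as usual (for example via `glShaderSource` and `glCompileShader`) and cached per feature mask.

```cpp
#include <cstdint>
#include <string>

// Hypothetical feature bits; each maps to one preprocessor define.
enum ShaderFeature : uint32_t {
    FEAT_TEXTURE = 1u << 0,
    FEAT_FOG     = 1u << 1,
    FEAT_ALPHA   = 1u << 2,
};

static const struct { uint32_t bit; const char* define; } kFeatureDefines[] = {
    {FEAT_TEXTURE, "USE_TEXTURE"},
    {FEAT_FOG,     "USE_FOG"},
    {FEAT_ALPHA,   "USE_ALPHA_BLEND"},
};

// Build one permutation of a shared GLSL source by prepending #defines.
// The body uses #ifdef USE_FOG etc. instead of runtime if statements.
std::string buildShaderVariant(const std::string& version,
                               const std::string& body, uint32_t features) {
    std::string src = "#version " + version + "\n";  // must come first in GLSL
    for (const auto& f : kFeatureDefines)
        if (features & f.bit) src += std::string("#define ") + f.define + " 1\n";
    return src + body;
}
```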

So I decided to implement the same system for scanline rendering in C/C++. There is one scanline rendering routine, with defines for the various feature permutations. When the engine renders triangles, it uses the appropriate permutation of that routine, keeping as little code in the inner loop as possible. Adding new features to the renderer is now easier, and the renderers no longer fall out of date with each other: the 32 bit renderer now has all the features of the 8 bit renderer and vice versa, for example.
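The text describes one scanline routine specialized with #defines. The sketch below swaps in C++17 templates with `if constexpr`, which achieves the same effect (one source, one compiled function per feature combination, no runtime feature branches in the inner loop) without including a file several times. The feature flags, the `Span` layout and the 50% blend are assumptions made for illustration.

```cpp
#include <array>
#include <cstdint>
#include <utility>

// Hypothetical feature flags for the rasterizer's inner loop.
enum ScanFeature : unsigned {
    SF_TEXTURE = 1u << 0,  // sample a texture row instead of a flat color
    SF_ALPHA   = 1u << 1,  // 50% blend with the destination pixel
};
constexpr unsigned kPermutations = 4;  // every combination of the two flags

struct Span {
    uint32_t* dst;        // destination row, already offset to the first pixel
    int count;            // pixels in the span
    const uint32_t* tex;  // texture row, used when SF_TEXTURE is set
    uint32_t flatColor;   // used otherwise
};

// 50% blend of two packed 0xAARRGGBB pixels without unpacking channels.
inline uint32_t blendHalf(uint32_t a, uint32_t b) {
    return ((a & 0xFEFEFEFEu) >> 1) + ((b & 0xFEFEFEFEu) >> 1);
}

// One source routine; the compiler emits a separate function per Features
// value and drops the branches whose condition is false at compile time.
template <unsigned Features>
void drawScanline(const Span& s) {
    for (int i = 0; i < s.count; ++i) {
        uint32_t c;
        if constexpr ((Features & SF_TEXTURE) != 0) c = s.tex[i];
        else c = s.flatColor;
        if constexpr ((Features & SF_ALPHA) != 0) c = blendHalf(c, s.dst[i]);
        s.dst[i] = c;
    }
}

using ScanlineFn = void (*)(const Span&);

// Table of all permutations, indexed by the feature mask.
template <unsigned... Ms>
constexpr std::array<ScanlineFn, sizeof...(Ms)>
makeTable(std::integer_sequence<unsigned, Ms...>) {
    return {{&drawScanline<Ms>...}};
}

inline constexpr auto kScanlineTable =
    makeTable(std::make_integer_sequence<unsigned, kPermutations>{});

// Pick the permutation once per span, outside the per-pixel loop.
void drawSpan(unsigned features, const Span& s) {
    kScanlineTable[features % kPermutations](s);
}
```

The per-span table lookup replaces per-pixel feature checks, and adding a feature means adding one flag and one `if constexpr` block rather than another hand-written routine.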

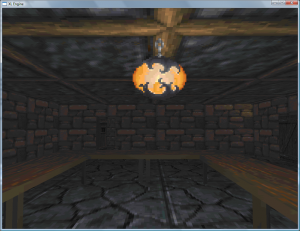

One feature I added is translucent surface rendering, which now works even in the 8 bit renderer. This enables certain effects in every renderer, from simple things such as translucent backgrounds for UI windows and the console to additive blending for things like torch flames. Some examples of alpha blending in the 8 bit software renderer:

In 8 bit this works through translucency tables: the final source palette index and the destination pixel index are used to look up a table entry containing the palette index closest to the properly blended result. This functionality will allow me to add other blending modes, such as additive, in the future. It also means that colored lighting and other filtering effects could be added to the software renderer later, as far as the palette allows anyway.
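Building and using such a translucency table could look like the sketch below. It assumes a 256-color palette, a plain 50% blend plus an additive mode, and nearest-color matching by squared RGB distance; these choices are illustrative, not DaggerXL’s actual implementation. The table is 64 KB per blend mode and only needs rebuilding when the palette changes, so the brute-force search is paid once, not per pixel.

```cpp
#include <algorithm>
#include <array>
#include <cstdint>
#include <vector>

struct Rgb { uint8_t r, g, b; };
using Palette = std::array<Rgb, 256>;

// Closest palette index to an RGB color (squared Euclidean distance in RGB).
uint8_t nearestIndex(const Palette& pal, int r, int g, int b) {
    int best = 0;
    long bestDist = -1;
    for (int i = 0; i < 256; ++i) {
        long dr = r - pal[i].r, dg = g - pal[i].g, db = b - pal[i].b;
        long d = dr * dr + dg * dg + db * db;
        if (bestDist < 0 || d < bestDist) { bestDist = d; best = i; }
    }
    return static_cast<uint8_t>(best);
}

enum class BlendMode { Alpha50, Additive };

// 256x256 table: entry [src * 256 + dst] is the palette index closest to
// the blended color of palette entries src and dst.
std::vector<uint8_t> buildTranslucencyTable(const Palette& pal, BlendMode mode) {
    std::vector<uint8_t> table(256 * 256);
    for (int s = 0; s < 256; ++s) {
        for (int d = 0; d < 256; ++d) {
            const Rgb a = pal[s], b = pal[d];
            int r, g, bl;
            if (mode == BlendMode::Alpha50) {
                r = (a.r + b.r) / 2; g = (a.g + b.g) / 2; bl = (a.b + b.b) / 2;
            } else {  // additive, clamped to the 8-bit channel range
                r = std::min(a.r + b.r, 255);
                g = std::min(a.g + b.g, 255);
                bl = std::min(a.b + b.b, 255);
            }
            table[s * 256 + d] = nearestIndex(pal, r, g, bl);
        }
    }
    return table;
}

// Per pixel, blending is a single table lookup.
inline void blendPixel(uint8_t& dst, uint8_t src, const std::vector<uint8_t>& table) {
    dst = table[src * 256 + dst];
}
```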

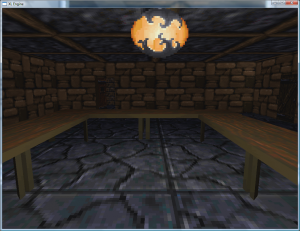

For the 32-bit renderer, I’ve been working on a color table that will let the 32 bit lighting/shading results match the original colormap more closely. This remapping is working, though it needs some tweaking before it really does the job properly. I’m already getting some pretty positive results for things like the colormap used for underwater and for fog, which I’ll show next time. The 32 bit renderer still has much more contrast than the 8 bit renderer, though the quality is improved in some ways. You can see for yourself in the comparison shots below. Clearly I still have more work to do in this area.
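One plausible way to let a 32 bit renderer follow the original colormap, sketched here under the assumption that texels are still stored as palette indices, is to expand the colormap into a table of 32 bit colors: each (light level, palette index) entry holds the RGB the 8 bit renderer would have shown. The `Colormap` layout is hypothetical, and matching true-color results (the harder part of the remapping described above) is not covered. A table like this could also be uploaded as a texture for a shader-based renderer.

```cpp
#include <array>
#include <cstddef>
#include <cstdint>
#include <vector>

struct Rgb { uint8_t r, g, b; };
using Palette = std::array<Rgb, 256>;

// An 8-bit colormap: for each light level, 256 palette indices (row-major).
struct Colormap {
    int levels;
    std::vector<uint8_t> indices;  // levels * 256 entries
};

// Expand the colormap into packed 0xAARRGGBB colors: entry [level * 256 + i]
// is the color the 8-bit renderer would display for index i at that level.
std::vector<uint32_t> expandColormap(const Palette& pal, const Colormap& cm) {
    std::vector<uint32_t> out(cm.indices.size());
    for (std::size_t i = 0; i < cm.indices.size(); ++i) {
        const Rgb c = pal[cm.indices[i]];
        out[i] = 0xFF000000u | (uint32_t(c.r) << 16) | (uint32_t(c.g) << 8) | c.b;
    }
    return out;
}

// Shade a texel (stored as its palette index) at a given light level.
uint32_t shadeTexel(const std::vector<uint32_t>& table, int level, uint8_t index) {
    return table[static_cast<std::size_t>(level) * 256 + index];
}
```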

Once the 32-bit colormap is working properly, I will use the same colormap with the shader-based OpenGL renderer as well, combining many of the effects afforded by the colormap with the quality and speed of hardware rendering. This is one example of how the software renderer is helping me improve the hardware rendering too.

Below are the comparison shots. Notice that more tweaking is still needed before they match, but the beginnings are working.