Written by Designer - Chris Wilson

[This is a catch-up article on the development of our AI so far, compiled from a number of dev diaries.]

The AI will use Unreal's AI Perception system and behaviour trees, as I've come to really like them while messing around with other projects. It may seem like overkill now, but it should pay off later when things get more complex. I've broken my tasks down into three sections: navigation, perception and suspicion.

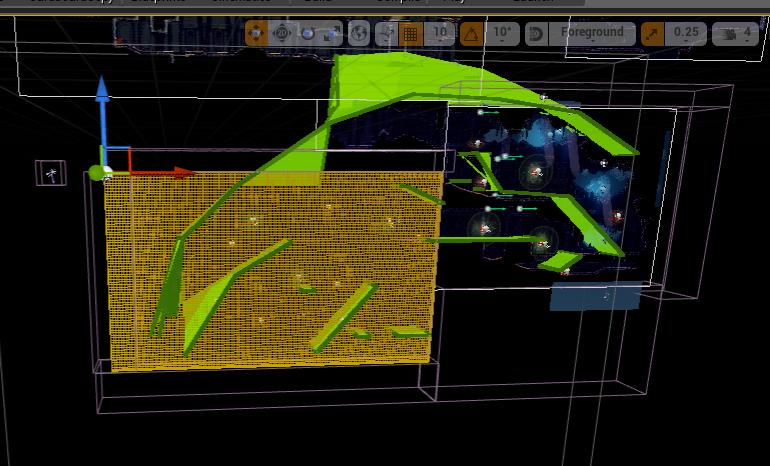

Navigation - All our tiles project their collision out into 3D space, so we can simply place a nav mesh volume over them. Things started off badly, but got better by the end.

Perception - As mentioned, we’re using the AI Perception system here. It provides pretty much everything we need in a handy module, and plays nicely with behaviour trees. At the moment I'm just using it to check, in a very basic way, whether the AI can see the player.

Suspicion - The suspicion system is what turns this from an action game into a stealth game.

Each AI tracks a single suspicion value, which maps to three states. The value decays whenever the player isn't in the AI's sight, and the tree structure means the AI gracefully drops back down to the previous behaviour:

- Not suspicious - Continue patrolling

- Suspicious - Stop and look towards the source of the suspicion

- Pursue - Move towards the source of the suspicion
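The three states above can be sketched as one suspicion value that rises while the player is seen, decays otherwise, and is compared against two thresholds. This is a minimal sketch in plain C++ rather than our Unreal code: the 0 to 1 range, the threshold values and the decay rate are hypothetical placeholders, not our tuned numbers.

```cpp
#include <algorithm>

// The three behaviour states described above.
enum class SuspicionState { NotSuspicious, Suspicious, Pursue };

// Hypothetical tuning values; suspicion is kept in the range [0, 1].
constexpr float kSuspiciousThreshold = 0.3f;  // at or above this: stop and look
constexpr float kPursueThreshold = 0.8f;      // at or above this: move towards the source
constexpr float kDecayPerSecond = 0.2f;       // drop-off while the player is unseen

struct SuspicionMeter {
    float value = 0.0f;

    // gainPerSecond is how quickly suspicion rises while the player is visible.
    void update(float deltaSeconds, bool playerVisible, float gainPerSecond) {
        if (playerVisible) {
            value += gainPerSecond * deltaSeconds;
        } else {
            value -= kDecayPerSecond * deltaSeconds;
        }
        value = std::clamp(value, 0.0f, 1.0f);
    }

    // The state is derived from the value every tick, so as the value decays
    // the AI steps back down: Pursue -> Suspicious -> NotSuspicious.
    SuspicionState state() const {
        if (value >= kPursueThreshold) return SuspicionState::Pursue;
        if (value >= kSuspiciousThreshold) return SuspicionState::Suspicious;
        return SuspicionState::NotSuspicious;
    }
};
```

Deriving the state from the value, rather than storing it separately, is what gives the "graceful drop back down" for free: there is no extra transition logic to keep in sync.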

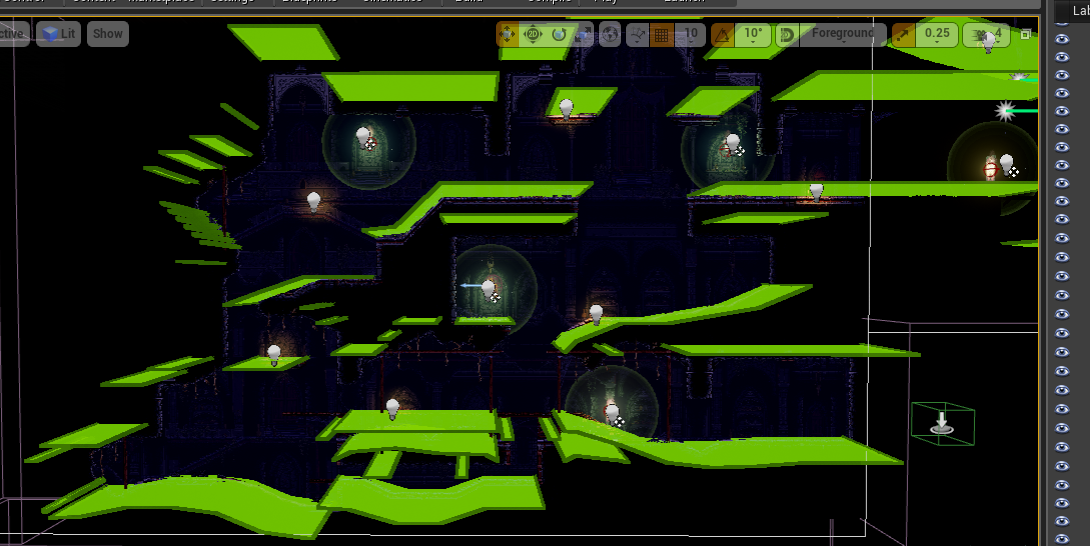

I managed to spend a bit of time working on the AI today. I had to change some related systems so that everything runs properly in simulation mode. A few things relied on there being a controlled pawn in the world, so this was a good chance to clean that up. I also set up some basic waypoints for the AI to patrol between:

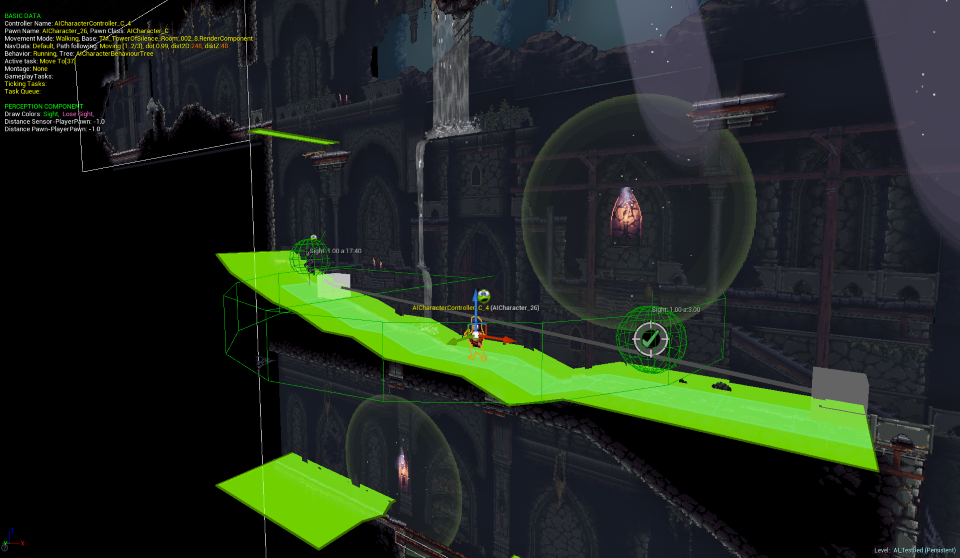

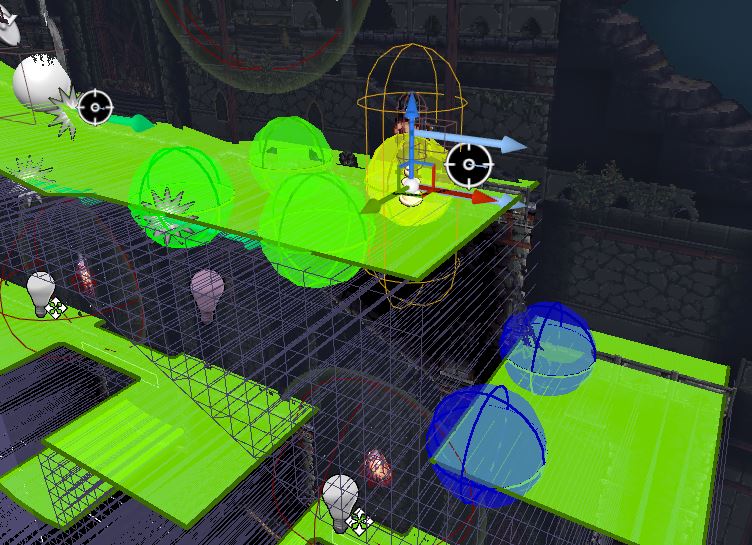
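In Unreal the patrol itself runs as a behaviour tree task, but the bookkeeping behind it is simple enough to show in plain C++. This sketch assumes a looping route and a hypothetical acceptance radius; `PatrolRoute` and `Vec2` are illustrative names, not engine types.

```cpp
#include <cmath>
#include <cstddef>
#include <utility>
#include <vector>

struct Vec2 { float x = 0.0f; float y = 0.0f; };

inline float distance(Vec2 a, Vec2 b) { return std::hypot(a.x - b.x, a.y - b.y); }

// A looping patrol route: the AI walks to each waypoint in order and wraps
// back to the first one after the last. Assumes at least one waypoint.
class PatrolRoute {
public:
    PatrolRoute(std::vector<Vec2> waypoints, float acceptanceRadius)
        : waypoints_(std::move(waypoints)), acceptanceRadius_(acceptanceRadius) {}

    // Returns the waypoint the AI should currently head for, advancing to the
    // next one once the AI is within the acceptance radius of the current one.
    Vec2 currentTarget(Vec2 aiPosition) {
        if (distance(aiPosition, waypoints_[index_]) <= acceptanceRadius_) {
            index_ = (index_ + 1) % waypoints_.size();
        }
        return waypoints_[index_];
    }

    std::size_t currentIndex() const { return index_; }

private:
    std::vector<Vec2> waypoints_;
    float acceptanceRadius_;
    std::size_t index_ = 0;
};
```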

You can also see what I like to call the 'perception biscuit', drawn by the gameplay debugging tool. As the tool is designed primarily for 3D games, it's of limited use from our 2D perspective, so the following image might give you a better idea of what's going on:

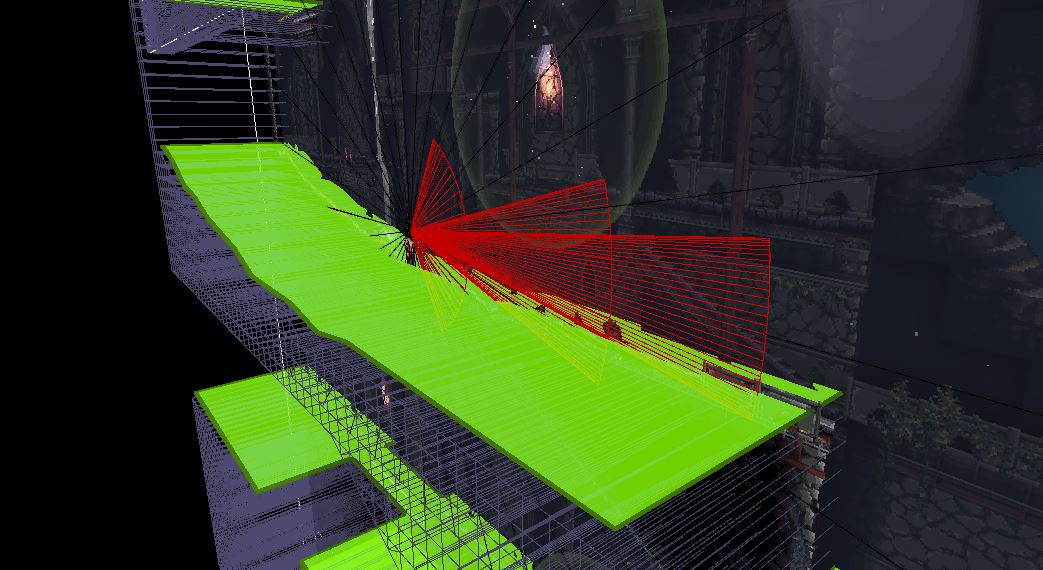

Creating a workable perception system for a 2D game in Unreal has proved a much bigger challenge than we expected. However, despite the initial problems, we’ve managed to come up with something perfect for our needs. One of our coders, Rex, did a great job of creating a bespoke 2D sense configuration for us.

What we now have is a collection of several 2D cones, which give a much better representation of the AI’s sight. We’ve also offset the visibility raycast so that it runs from eye to eye, rather than from the centre of one character to the centre of the other.
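A minimal sketch of the idea, in plain C++ rather than our actual Unreal sense configuration: each cone is a range plus a half-angle measured from the AI's eye, and the visibility ray runs from the AI's eye to the player's eye. The `lineOfSightClear` callback is a hypothetical stand-in for an engine line trace, and all names here are illustrative.

```cpp
#include <cmath>
#include <cstddef>
#include <functional>
#include <vector>

struct Vec2 { float x = 0.0f; float y = 0.0f; };

// One 2D view cone, measured from the AI's eye position.
struct ViewCone {
    float range;         // maximum sight distance
    float halfAngleRad;  // half of the cone's opening angle, in radians
};

// True if `point` lies inside the cone rooted at `eye` and facing along `facing`
// (which must be a non-zero vector).
bool insideCone(const ViewCone& cone, Vec2 eye, Vec2 facing, Vec2 point) {
    const float dx = point.x - eye.x;
    const float dy = point.y - eye.y;
    const float dist = std::hypot(dx, dy);
    if (dist > cone.range) return false;
    if (dist == 0.0f) return true;
    const float facingLen = std::hypot(facing.x, facing.y);
    const float cosAngle = (dx * facing.x + dy * facing.y) / (dist * facingLen);
    return cosAngle >= std::cos(cone.halfAngleRad);
}

// Hypothetical stand-in for the engine's line trace:
// returns true if nothing blocks the segment from `from` to `to`.
using LineOfSightFn = std::function<bool(Vec2 from, Vec2 to)>;

// Returns the indices of every cone containing the player's eye. If the
// eye-to-eye ray is blocked, the player is hidden and the result is empty.
std::vector<std::size_t> conesSeeingPlayer(const std::vector<ViewCone>& cones,
                                           Vec2 aiEye, Vec2 facing, Vec2 playerEye,
                                           const LineOfSightFn& lineOfSightClear) {
    std::vector<std::size_t> hits;
    for (std::size_t i = 0; i < cones.size(); ++i) {
        if (insideCone(cones[i], aiEye, facing, playerEye)) hits.push_back(i);
    }
    // Only trace when the player is inside at least one cone.
    if (!hits.empty() && !lineOfSightClear(aiEye, playerEye)) hits.clear();
    return hits;
}
```

Returning every cone the player is in (rather than just a yes/no) is also what a debug view needs to show which cone(s) you're standing in.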

These two changes have made a massive difference to how it functions, and give us much more room to add interesting gameplay features. There’s plenty of tweaking ahead but by and large the AI sees you when you think it should, and hiding from it is also much more intuitive than before.

Speaking of tweaking, Rex also implemented a new debug view to replace Epic’s own Gameplay Debugger for our purposes. It lets us see at a glance which cone(s) the player is in, and whether the AI can successfully raycast to the player.

EQS

Unreal Engine has a really powerful tool known as the ‘Environment Query System’ (EQS). It lets you ask questions about the environment around an AI and filter the answers down into usable bits of data. Using it for a 2D game is obviously overkill, but the key advantage is that queries are very easy to create and test using just Blueprint and the editor. Wherever possible, my aim is for everything to stay completely readable by anyone on the team, so they can make their own improvements and suggestions.

At the moment I’m only implementing a few simple queries, for example ‘find the nearest waypoint I can successfully plot a path to’ and ‘pick a random spot near where I last saw the player’. Here's an example of picking a nearby valid point (the blue points are discarded because the AI can't reach them):
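Under the hood, a query like ‘find the nearest waypoint I can successfully plot a path to’ has a generate, filter and score shape. The sketch below shows that shape in plain C++; it is not EQS code, and `canReach` is a hypothetical stand-in for a navigation path query.

```cpp
#include <cmath>
#include <functional>
#include <optional>
#include <vector>

struct Vec2 { float x = 0.0f; float y = 0.0f; };

// Hypothetical stand-in for a navigation query: true if a path exists.
using CanReachFn = std::function<bool(Vec2 from, Vec2 to)>;

// Generate: the candidate items are the waypoints.
// Filter: drop any waypoint the AI can't plot a path to.
// Score: prefer the shortest straight-line distance from the AI.
std::optional<Vec2> nearestReachableWaypoint(const std::vector<Vec2>& waypoints,
                                             Vec2 aiPosition,
                                             const CanReachFn& canReach) {
    std::optional<Vec2> best;
    float bestDistance = 0.0f;
    for (const Vec2& wp : waypoints) {
        if (!canReach(aiPosition, wp)) continue;  // filter step
        const float d = std::hypot(wp.x - aiPosition.x, wp.y - aiPosition.y);  // score step
        if (!best || d < bestDistance) {
            best = wp;
            bestDistance = d;
        }
    }
    return best;  // empty if no waypoint is reachable
}
```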

I’m looking forward to expanding these in the future using a few simple tricks to help the AI make smarter decisions without cheating too much. For example, when I create a query to pick a random spot near where the AI last saw the player, I can weight the results towards where the player actually is (even if they are hidden!).
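One way to do that weighting, sketched in plain C++ under our own assumptions: take candidate points already generated around the last sighting (and filtered for reachability), weight each by how close it is to the player's true position, then pick one at random using those weights. `pickSearchPoint` and its `bias` parameter are hypothetical; the 1/(1 + bias·d) weighting is just one simple choice.

```cpp
#include <cmath>
#include <cstddef>
#include <random>
#include <vector>

struct Vec2 { float x = 0.0f; float y = 0.0f; };

// Picks one candidate search point at random, weighting each point by how close
// it is to the player's true (possibly hidden) position. `bias` controls the
// cheat: 0 gives a uniform pick, larger values pull the choice towards the player.
// Assumes `candidates` is non-empty and `bias` >= 0.
Vec2 pickSearchPoint(const std::vector<Vec2>& candidates, Vec2 truePlayerPos,
                     float bias, std::mt19937& rng) {
    std::vector<double> weights;
    weights.reserve(candidates.size());
    for (const Vec2& c : candidates) {
        const double d = std::hypot(c.x - truePlayerPos.x, c.y - truePlayerPos.y);
        weights.push_back(1.0 / (1.0 + bias * d));
    }
    std::discrete_distribution<std::size_t> pick(weights.begin(), weights.end());
    return candidates[pick(rng)];
}
```

Keeping the pick random, rather than always choosing the closest point, is what stops the cheat from being obvious.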

Last Known Position

Another important aspect of our perception system is the concept of ‘last known position’, which I’ll refer to as LKP from now on to preserve my sanity!

Unreal’s perception system has its own concept of LKP, but we aren’t using it just yet. My simplified version places a marker object at the player's position whenever the player leaves the view cones. If the AI reaches that point and still can't get a visual on the player, the position is used as the starting point for a search.
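The decision described here can be sketched as a small tracker in plain C++. This is an illustration under our own assumptions, not the actual blueprint: `LastKnownPositionTracker`, the `Action` values and the arrival radius are hypothetical, and going back to patrolling after a search is left out.

```cpp
#include <cmath>

struct Vec2 { float x = 0.0f; float y = 0.0f; };

enum class Action { Patrol, PlayerInSight, MoveToLastKnownPosition, Search };

class LastKnownPositionTracker {
public:
    explicit LastKnownPositionTracker(float arriveRadius) : arriveRadius_(arriveRadius) {}

    // Call once per tick with the result of the sight check.
    Action update(bool playerVisible, Vec2 playerPos, Vec2 aiPos) {
        if (playerVisible) {
            // While the player is visible the marker follows them, so when they
            // leave the cones it is left exactly where they were last seen.
            lkp_ = playerPos;
            hasLkp_ = true;
            return Action::PlayerInSight;
        }
        if (!hasLkp_) return Action::Patrol;  // never seen: keep patrolling
        const float d = std::hypot(lkp_.x - aiPos.x, lkp_.y - aiPos.y);
        // Reached the LKP without regaining sight: start searching from there.
        return d <= arriveRadius_ ? Action::Search : Action::MoveToLastKnownPosition;
    }

    Vec2 lastKnownPosition() const { return lkp_; }

private:
    float arriveRadius_;
    Vec2 lkp_;
    bool hasLkp_ = false;
};
```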

Having this LKP object also allows me to deploy another classic stealth AI cheat which I like to refer to as ‘6th sense’.

Imagine a situation where the player passes through an AI’s view cones, heading in a direction it can’t see. How do I make the AI seem smart and know which way the player has gone? Sure, I could make it super complicated by combining things like dead reckoning with multiple EQS queries to decide which cover the player is likely to be in. That's the sort of thing you’d find in Crysis or Halo, and as such it's somewhat beyond our scope as a 2D game.

Instead, the LKP keeps updating for a short time (~0.5 seconds) after the AI has technically lost sight of the player. From the player's perspective this usually just looks like intuition, and it would only look like cheating if that window were too long. As with most things in life, this is best explained with a gif.

The green tick in the crosshair is my LKP. See how it continues to update even after the player character leaves the view cones.
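The ‘6th sense’ window can be sketched in a few lines of plain C++. The class name is hypothetical and the 0.5 second default simply mirrors the rough figure above; in the game this lives in our AI logic rather than a standalone class.

```cpp
#include <limits>

struct Vec2 { float x = 0.0f; float y = 0.0f; };

// LKP marker with a short grace period: for `graceSeconds` after the player
// leaves the view cones, the marker keeps tracking their real position.
class SixthSenseLkp {
public:
    explicit SixthSenseLkp(float graceSeconds = 0.5f) : graceSeconds_(graceSeconds) {}

    void update(float deltaSeconds, bool playerVisible, Vec2 playerPos) {
        if (playerVisible) {
            timeSinceLost_ = 0.0f;
            lkp_ = playerPos;
            return;
        }
        timeSinceLost_ += deltaSeconds;
        if (timeSinceLost_ <= graceSeconds_) {
            lkp_ = playerPos;  // the cheat: briefly follow the unseen player
        }
    }

    Vec2 position() const { return lkp_; }

private:
    float graceSeconds_;
    // Starts "long ago" so a player who was never seen isn't tracked.
    float timeSinceLost_ = std::numeric_limits<float>::infinity();
    Vec2 lkp_;
};
```

The length of the window is the whole trick: short enough and it reads as intuition, too long and it reads as the AI cheating.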

Suspicion

Suspicion is what we use to decide what the AI thinks is worth investigating, and later chasing after. The rate at which suspicion increases depends on which cone the player character is currently in. If they are in more than one cone, the cone with the highest rate is used.

Currently we have 3 cones:

- Close Cone - Instant max suspicion. If the AI sees you here, it will chase immediately

- Mid Cone - Moderate increase

- Far Cone - Small increase
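The cone rules above can be sketched as a single update function in plain C++. The per-second rates are hypothetical placeholders on a 0 to 1 suspicion scale, not our tuned values, and decay while the player is unseen is left out to keep the example focused.

```cpp
#include <algorithm>
#include <vector>

enum class Cone { Close, Mid, Far };

constexpr float kMaxSuspicion = 1.0f;

// Hypothetical rates, in suspicion per second.
float rateFor(Cone cone) {
    switch (cone) {
        case Cone::Mid: return 0.5f;   // moderate increase
        case Cone::Far: return 0.15f;  // small increase
        case Cone::Close: break;       // handled as an instant jump to max
    }
    return 0.0f;
}

// Applies one tick of suspicion gain for the cones the player is currently in.
float applyConeSuspicion(float suspicion, const std::vector<Cone>& conesContainingPlayer,
                         float deltaSeconds) {
    // Being seen in the close cone means instant max suspicion.
    if (std::find(conesContainingPlayer.begin(), conesContainingPlayer.end(), Cone::Close) !=
        conesContainingPlayer.end()) {
        return kMaxSuspicion;
    }
    // Otherwise use whichever cone has the highest rate.
    float rate = 0.0f;
    for (Cone c : conesContainingPlayer) rate = std::max(rate, rateFor(c));
    return std::min(kMaxSuspicion, suspicion + rate * deltaSeconds);
}
```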

Note that the suspicion system currently doesn’t take into account how well lit the player is. I’ll try adding a lighting-based modifier during the next pass; right now I feel it’d muddy the waters while we test out the basics.

I also added some code to handle the player colliding with the AI. Spoiler: they don’t like it very much.

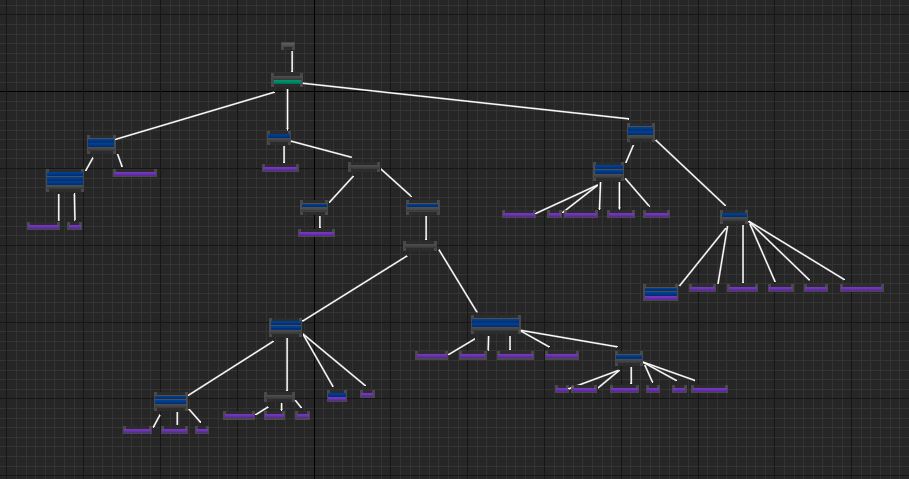

Behavior Tree

All these new features mean there’s a lot more going on in the Behavior Tree now. I think it’s time to start splitting it into separate behaviors...

A few other issues have started to appear now that things are a bit more complicated. In quite a few places I abort sequences fairly harshly when something changes (for example, when the player leaves the AI’s sight), and these aborts now cause visible hitching as the AI flip-flops between two branches. I’ll need to take a step back, rethink some of these longer sequences and find more graceful break points.

So, that’s all for now! What’s next?

- Hooking up all the animations

- Moving the view cone around (looking up and down, moving up and down slopes etc)

- Adding some placeholder sounds

- Lots of tweaking of the ‘magic numbers’ until it feels good